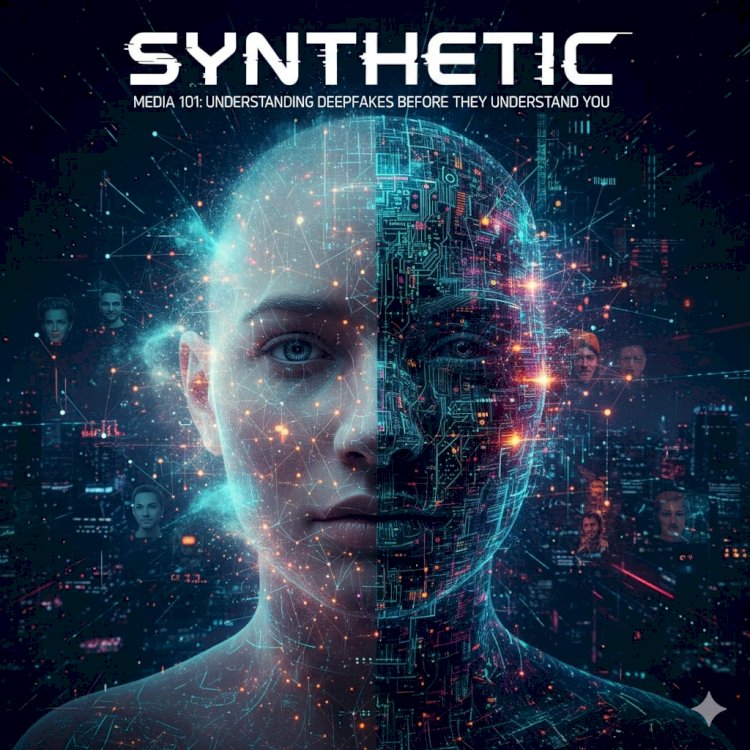

Synthetic Media 101: Understanding Deepfakes Before They Understand You

By Dr. Pooyan Ghamari, Swiss Economist and Visionary

The Day the President Surrendered… While Sleeping

In March 2022, a fabricated video surfaced of Ukrainian President Volodymyr Zelenskyy telling his troops to lay down their arms. It circulated on social media before it was debunked and taken down. That was the moment the world woke up: deepfakes had graduated from meme swaps and Hollywood stunts to weapons-grade disinformation.

Welcome to Synthetic Media 101. Class is now in session.

First, the Boring (But Essential) Definition

Synthetic media is any content — audio, video, image, or text — that is partially or wholly generated or manipulated by artificial intelligence. Deepfakes are the most infamous subset: hyper-realistic face and voice swaps powered by generative adversarial networks (GANs) and diffusion models.

Think of it as Photoshop on steroids that no longer needs a human designer.

From Darth Vader Cat Videos to Financial Chaos

The technology timeline is accelerating faster than regulators can spell “blockchain”:

- 2017 – FaceSwap Reddit experiments

- 2019 – “DeepNude” app (shut down within days of going viral)

- 2021 – Tom Cruise TikTok deepfakes fool millions

- 2023 – AI voice cloning scams steal millions from companies (in an earlier case, reported in 2021, a bank manager in the UAE approved about $35 million in transfers after a phone call with a cloned voice of a company director)

- 2025 – We’re here. Real-time face and voice swaps now run on consumer hardware.

The Three-Headed Monster: Technology, Economics, and Psychology

- Technology: Training a decent voice clone now costs less than $500 and takes a few hours if you have 5–10 minutes of clean target audio. Video is getting cheaper by the month. Open-source tools like Roop, DeepFaceLab, and SadTalker are in the hands of teenagers.

- Economics: The cost of deception is plummeting while the reward (political influence, stock manipulation, extortion, revenge porn) is skyrocketing. Basic supply-demand logic says we’re in trouble.

- Psychology: Humans are wired for “seeing is believing.” Even when we’re told a video is fake, our emotional brain often overrules the rational one. The flip side is the “liar’s dividend”: the more deepfakes exist, the easier it becomes for real scandals to be dismissed as “fake.”

The Corporate Nightmare Scenario (That Already Happened)

Imagine you’re the CFO of a Fortune 500 company. Friday 4:55 p.m. You receive an urgent video call from what appears to be your CEO — same face, voice, background office in Zurich, even the little twitch in his left eye. He says the company is finalizing a secret acquisition and needs an immediate wire of €200 million to a Hungarian law firm. “Tell no one, sign nothing, just do it.”

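A voice-only version of this happened in 2019 to a UK energy firm (about $243,000 lost after a phone call that mimicked the parent company’s chief executive). By 2024, reported losses from deepfake video calls had reached eight figures.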

So… Are We Doomed?

Not yet. But hope is not a strategy.

Detection Tools (The Current Score: Humans 0 – AI 1.5)

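- Forensic: pupil reflection inconsistencies, unnatural blinking patterns, audio spectrogram artifacts, and blood-flow signals in facial video (Intel’s “FakeCatcher”)

- Provenance metadata and watermarking: cryptographically signed content credentials (the Adobe-led Content Authenticity Initiative and the C2PA standard, with Microsoft and Intel among the backers)

- Automated detection services (Deepware, Reality Defender)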

Problem: Detection always lags creation. Today’s best detectors are 90–95% accurate on yesterday’s deepfakes. Tomorrow’s models laugh at them.
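To see why a 90–95% accuracy figure is less reassuring than it sounds, here is a rough base-rate calculation in Python. It assumes, purely for illustration, that "accuracy" means the detector flags 95% of fakes and clears 95% of real clips, and that fakes make up the hypothetical shares of scanned content listed in the loop. None of these numbers come from a measured benchmark.

```python
def share_of_flags_that_are_fake(sensitivity, specificity, prevalence):
    """Fraction of flagged clips that really are fake (Bayes' rule)."""
    true_flags = sensitivity * prevalence
    false_flags = (1.0 - specificity) * (1.0 - prevalence)
    return true_flags / (true_flags + false_flags)


# Assumed detector quality: 95% on fakes and 95% on real clips.
SENSITIVITY = 0.95
SPECIFICITY = 0.95

# Hypothetical shares of fake clips in what the detector scans.
for prevalence in (0.5, 0.01, 0.001):
    share = share_of_flags_that_are_fake(SENSITIVITY, SPECIFICITY, prevalence)
    print(f"fakes are {prevalence:.1%} of clips: {share:.1%} of flags are real fakes")
```

Under these assumptions, when only 1 clip in 100 is fake, roughly 16% of the detector's alarms point at actual fakes and the rest are false alarms. That is before tomorrow's models make the detector itself less accurate.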

The Nuclear Options Nobody Wants to Use

- Mandatory cryptographic signing of all legitimate media (impossible at scale)

- Preemptive biometric watermarks embedded in every camera and microphone (hello, privacy apocalypse)

- Licensing requirements for generative AI above a certain capability threshold (good luck enforcing globally)

The Economist’s Answer: Raise the Price of Lying

As a Swiss economist, I rarely trust technology or regulation alone. Markets, however, respond beautifully to incentives.

Three ideas that actually scale:

- Reputation-Backed Media: Platforms and news outlets voluntarily sign content with private keys. Unsigned or broken-signature media gets a visible yellow “unverified” banner. Trust becomes measurable and monetizable.

- Liability Shift: Make the platform that amplifies harmful synthetic media jointly liable (like copyright). Watch moderation budgets explode overnight.

- Insurance Markets for Identity: Let individuals and companies buy “deepfake insurance.” The underwriting process will force far stricter know-your-customer (KYC) checks and create the first real economic penalty for careless data leaks (because your voiceprints and face maps will determine your premium).
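The signing idea in the first bullet can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a real provenance system. To stay within the standard library it uses a keyed HMAC as a stand-in for a true private-key signature (a real deployment would use a public-key scheme so that verifiers never hold the signing key), and the publisher name, key, and function names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical registry of publisher keys. In a real system these would be
# public keys; a shared HMAC key is used here only as a stdlib stand-in.
PUBLISHER_KEYS = {"example-news": b"demo-key-not-for-production"}


def sign_media(publisher, media_bytes):
    """Return a hex tag binding the media bytes to the publisher's key."""
    key = PUBLISHER_KEYS[publisher]
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def banner_for(publisher, media_bytes, signature):
    """Decide which banner a platform would show next to the media."""
    if signature is None or publisher not in PUBLISHER_KEYS:
        return "unverified"  # unsigned, or signed by an unknown publisher
    expected = sign_media(publisher, media_bytes)
    # Constant-time comparison avoids leaking how much of the tag matched.
    if hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8")):
        return "verified"
    return "unverified"  # broken signature: the bytes changed after signing


clip = b"raw bytes of a video file"
tag = sign_media("example-news", clip)
print(banner_for("example-news", clip, tag))
print(banner_for("example-news", clip + b" edited", tag))
print(banner_for("example-news", clip, None))
```

The point of the sketch is that changing a single byte of the clip after signing breaks the check, so an edited or re-generated video falls back to the "unverified" banner without anyone having to judge whether it looks fake.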

Your Personal Defense Toolkit (2025 Edition)

- Never make high-stakes decisions based on unsolicited video/audio alone. Use pre-agreed code phrases for emergencies.

- Record short “canary” videos annually saying “If I ever ask you to wire money on video, ignore me — call this number instead.”

- Freeze your credit and enable voice biometrics that require liveness detection at your bank.

- Assume anything emotionally charged that arrives without context is fake until proven otherwise.
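For teams that want to turn the first rule into a written procedure, here is a minimal Python sketch. It stores a pre-agreed code phrase only as a salted hash and refuses to approve a high-stakes request unless the phrase matches and someone has called back on a known number. The function names, the normalization rule, and the "both checks required" policy are assumptions made for illustration, not an established standard.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor for the phrase hash


def _normalize(phrase):
    # Ignore case and extra spaces so spoken phrases compare reliably.
    return " ".join(phrase.lower().split()).encode("utf-8")


def enroll_phrase(phrase):
    """Return (salt, digest) to store instead of the phrase itself."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", _normalize(phrase), salt, ITERATIONS)
    return salt, digest


def phrase_matches(candidate, salt, digest):
    """Check a spoken or typed phrase against the stored hash."""
    attempt = hashlib.pbkdf2_hmac("sha256", _normalize(candidate), salt, ITERATIONS)
    return hmac.compare_digest(attempt, digest)


def safe_to_act(phrase_ok, called_back_on_known_number):
    """Assumed policy: a high-stakes request needs both checks to pass."""
    return phrase_ok and called_back_on_known_number


salt, digest = enroll_phrase("Purple  Giraffe 42")
ok = phrase_matches("purple giraffe 42", salt, digest)
print(safe_to_act(ok, called_back_on_known_number=False))
```

Requiring the callback as well as the phrase is deliberate: a phrase can leak or be overheard, while a call placed to a number you already had is much harder for an impersonator to intercept.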

The Paradox We Must Embrace

The same models that can fake a president surrendering can also resurrect a deceased parent for one last conversation, let artists collaborate with their heroes across time, and give voice to the voiceless (literally — ALS patients using real-time synthetic speech).

We don’t need to ban the fire. We need to invent fire alarms, fire departments, and building codes — fast.

Because the deepfakes are already learning our mannerisms, our weaknesses, and our trust.

The question is no longer “Can they fool us?” The question is: “When they do, will we still recognize the truth?”

Class dismissed.

content-team
